Understand the key differences between declarative and imperative automation, and learn how adopting declarative models can drive more scalable, reliable, and trustworthy automation for modern infrastructure.

When teams first start down the automation path, they often begin with what feels familiar: imperative automation.

That’s no surprise. Manual CLI interaction is imperative by nature—you connect to a device, type a command, perform an action. So when you begin automating, you naturally encode those same steps into a script or workflow.

But the more complex your environment becomes, the more this approach starts to break down. You find yourself writing longer scripts, handling more edge cases, tracking more state, and hitting more failures. Complexity snowballs.

That’s why declarative automation matters. It offers a fundamentally different and more scalable model for building automation that’s predictable, reliable, and easier to maintain.

Declarative vs imperative automation: Core distinctions

At the heart of the distinction is this:

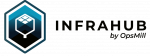

- Imperative automation focuses on how to do something. It encodes each action, step by step.

- Declarative automation focuses on what the end result should be. It describes the desired state.

In an imperative model, if you want to configure a VLAN, you might write 10 steps to make that happen. You’re telling the system exactly what to do.

In a declarative model, you simply describe the outcome: “I want this VLAN on this interface.” The system figures out the necessary steps and reconciles them against the current state.
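To make the contrast concrete, here is a minimal Python sketch. It assumes a hypothetical device model (a dict mapping interface names to sets of VLAN IDs) and abstract `("add" | "remove", interface, vlan)` changes rather than any real vendor syntax. `imperative_workflow` and `declarative_plan` are illustrative names, not a real library.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical device model: interface name -> set of VLAN IDs allowed on it.
State = Dict[str, Set[int]]
# A change is (action, interface, vlan), e.g. ("add", "eth1", 10).
Change = Tuple[str, str, int]


def imperative_workflow() -> List[Change]:
    # Imperative: the author decides every step up front,
    # without looking at what the device already has.
    return [
        ("remove", "eth1", 20),
        ("add", "eth1", 10),
        ("add", "eth2", 10),
    ]


def declarative_plan(desired: State, current: State) -> List[Change]:
    # Declarative: the author states the outcome; the steps are derived
    # by comparing desired state with current state.
    changes: List[Change] = []
    for iface in sorted(set(desired) | set(current)):
        want = desired.get(iface, set())
        have = current.get(iface, set())
        changes += [("remove", iface, v) for v in sorted(have - want)]
        changes += [("add", iface, v) for v in sorted(want - have)]
    return changes


current = {"eth1": {20}, "eth2": {10}}
desired = {"eth1": {10}, "eth2": {10}}
print(declarative_plan(desired, current))  # [('remove', 'eth1', 20), ('add', 'eth1', 10)]
```

Here `declarative_plan` returns only the two changes eth1 actually needs, while the hard-coded imperative list would also try to re-add VLAN 10 on eth2. The sketch treats desired state as authoritative: an interface present on the device but absent from `desired` would have all its VLANs removed, a choice a real system would need to make explicitly.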

The difference matters most when something fails. If an imperative workflow breaks halfway through, your system can be left in an inconsistent state. Declarative systems, by contrast, are designed to converge on the intended state, making them inherently idempotent and easier to retry safely.
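The following sketch simulates that failure mode under stated assumptions. It uses a hypothetical `Device` class whose `add` call raises an error if the VLAN already exists (like a non-idempotent create), plus an injected transient failure on the second step. The imperative retry trips over the step that already succeeded; the declarative retry applies only what is still missing.

```python
from typing import Dict, Optional, Set, Tuple


class Device:
    """Hypothetical device: interface name -> VLAN IDs."""

    def __init__(self) -> None:
        self.vlans: Dict[str, Set[int]] = {}
        # (interface, vlan) pair that fails while set, to simulate a transient error
        self.fail_on: Optional[Tuple[str, int]] = None

    def add(self, iface: str, vlan: int) -> None:
        if (iface, vlan) == self.fail_on:
            raise RuntimeError(f"transient failure adding VLAN {vlan} to {iface}")
        if vlan in self.vlans.get(iface, set()):
            # Non-idempotent create: adding something that exists is an error.
            raise ValueError(f"VLAN {vlan} already on {iface}")
        self.vlans.setdefault(iface, set()).add(vlan)


def imperative_run(dev: Device) -> None:
    # Fixed sequence of steps with no awareness of what is already applied.
    dev.add("eth1", 10)
    dev.add("eth2", 10)
    dev.add("eth3", 10)


def declarative_run(dev: Device, desired: Dict[str, Set[int]]) -> None:
    # Apply only what is missing (removals omitted for brevity).
    for iface, want in desired.items():
        for vlan in sorted(want - dev.vlans.get(iface, set())):
            dev.add(iface, vlan)


desired = {"eth1": {10}, "eth2": {10}, "eth3": {10}}

# Imperative: fail on step 2, then retry the whole script.
dev_a = Device()
dev_a.fail_on = ("eth2", 10)
try:
    imperative_run(dev_a)
except RuntimeError:
    pass  # eth1 is configured; eth2 and eth3 are not
dev_a.fail_on = None
imperative_retry_error = ""
try:
    imperative_run(dev_a)
except ValueError as err:
    imperative_retry_error = str(err)  # the retry trips over step 1
print(imperative_retry_error)  # VLAN 10 already on eth1

# Declarative: same failure, then re-apply the same desired state.
dev_b = Device()
dev_b.fail_on = ("eth2", 10)
try:
    declarative_run(dev_b, desired)
except RuntimeError:
    pass
dev_b.fail_on = None
declarative_run(dev_b, desired)  # adds only eth2 and eth3
print(dev_b.vlans == desired)  # True
```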

Why engineers begin with imperative automation

Nearly every engineer starts with imperative automation—because that’s what we’ve always done.

Manual CLI interaction is procedural. So when you first move into automation, it’s natural to translate that process into scripts. But as you automate more of the environment, imperative automation becomes harder to scale.

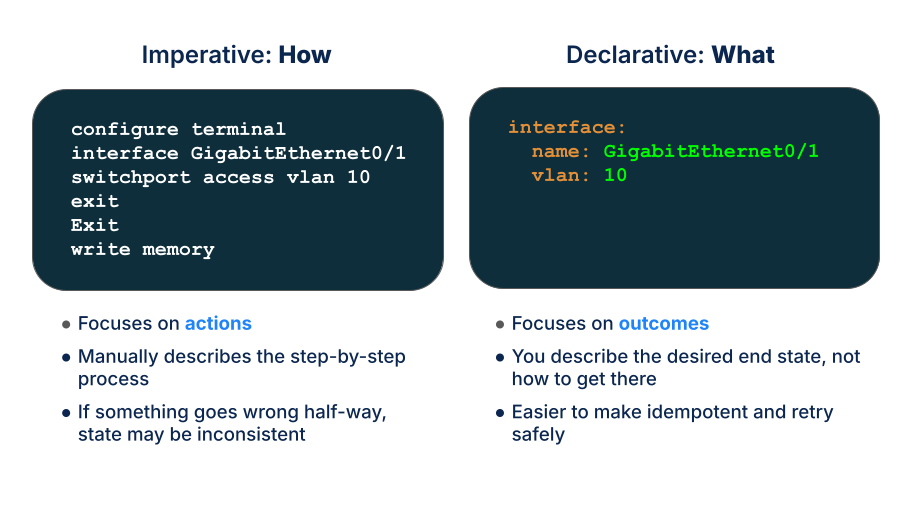

Each imperative workflow must explicitly interact with every target endpoint. Because every step runs against every endpoint, the number of interactions to script, test, and maintain multiplies as either one grows. Every time a vendor change forces you to update the workflow, complexity increases again.

Handling state transitions gets more complex. Dependencies and failure points proliferate. Testing and debugging imperative workflows becomes a nightmare.

This snarl of complexity is exactly what declarative models are designed to avoid.

The rising importance of declarative approaches

Declarative automation has already become foundational in many parts of modern infrastructure.

In the cloud, tools like Terraform use this model. You define what you want, such as a VM or a network, and Terraform works out whether to create, update, or delete resources to match. You no longer write step-by-step procedures for each action.
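The core of that logic can be sketched as a three-way comparison between desired and actual resources. This is a simplified illustration of the idea, not how Terraform is implemented; the resource names and attribute dicts are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical resource model: resource name -> attributes.
Resources = Dict[str, Dict[str, str]]


def plan(desired: Resources, actual: Resources) -> List[Tuple[str, str]]:
    """Classify each resource as create, update, or delete."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
        # Resources that already match need no action.
    return actions


desired = {
    "vm-web": {"size": "medium"},
    "net-app": {"cidr": "10.0.1.0/24"},
}
actual = {
    "vm-web": {"size": "small"},
    "vm-old": {"size": "small"},
}
print(plan(desired, actual))
# [('create', 'net-app'), ('delete', 'vm-old'), ('update', 'vm-web')]
```

Real tools do considerably more, for example ordering actions by dependency and deciding whether a change can be applied in place or requires destroying and recreating the resource.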

Likewise, Kubernetes has brought declarative principles to container orchestration: you describe the desired state of the system (for example, 5 replicas of an application) and the platform ensures that state is maintained.
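The mechanism behind this is a control loop: observe actual state, compare it with desired state, and act on the difference. The sketch below mimics that idea for a replica count. `ReplicaController` is a hypothetical class, not Kubernetes code; a real controller runs continuously and reacts to watch events rather than being called by hand.

```python
from typing import List


class ReplicaController:
    """Hypothetical controller that keeps `desired` replicas running."""

    def __init__(self, desired: int) -> None:
        self.desired = desired
        self.running: List[str] = []
        self._next_id = 0

    def reconcile(self) -> None:
        # Compare observed replicas with the desired count and act on the gap.
        while len(self.running) < self.desired:
            self.running.append(f"app-{self._next_id}")
            self._next_id += 1
        while len(self.running) > self.desired:
            self.running.pop()  # remove the most recently listed replica


ctl = ReplicaController(desired=5)
ctl.reconcile()
print(len(ctl.running))  # 5

# Drift: two replicas disappear outside the controller's control.
ctl.running.remove("app-1")
ctl.running.remove("app-3")
ctl.reconcile()
print(ctl.running)  # ['app-0', 'app-2', 'app-4', 'app-5', 'app-6']

# Change the intent, not the steps: scale down by editing desired state.
ctl.desired = 3
ctl.reconcile()
print(ctl.running)  # ['app-0', 'app-2', 'app-4']
```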

These concepts are now spreading into network automation. Declarative automation provides a bridge toward higher-level capabilities, such as self-healing networks, whose complexity procedural scripts simply can't handle at scale.

How declarative automation builds trustworthy systems

Declarative models directly address the shortcomings of imperative workflows by breaking down complexity and embedding reliability into the system by design.

They support many of the principles that are essential for trustworthy automation:

- Predictability: Outcomes are more consistent because you define the end state.

- Idempotency: Re-applying a declarative definition won’t produce unintended changes.

- Resilience: If partial failures occur, declarative systems can retry or re-converge, reducing the risk of inconsistent state.

Declarative automation and idempotency are tightly linked. A declarative system is inherently idempotent: you define the end state, the system works out how to get there, and re-applying the same definition changes nothing once that state is reached. If something goes wrong along the way, the system can retry or back out to a known state.

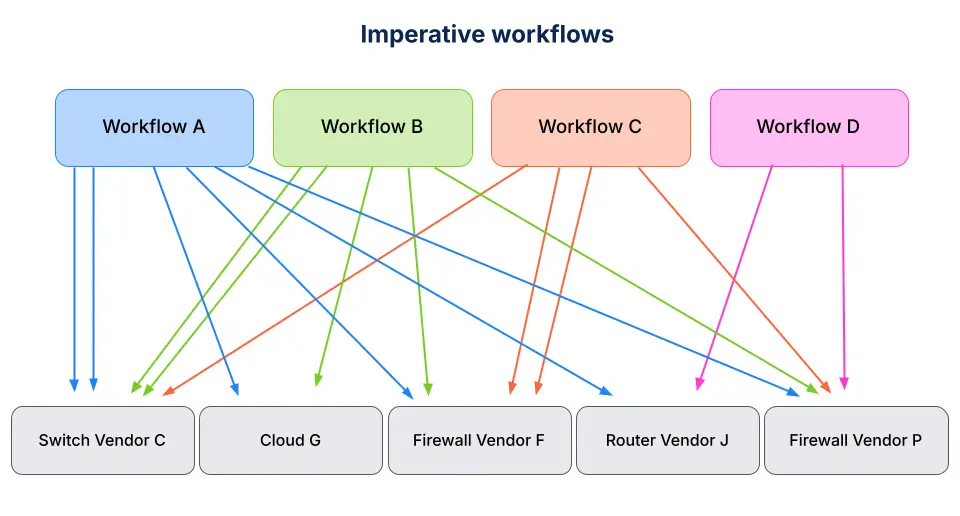

Instead of building one giant workflow that touches everything, declarative systems decompose the work. Smaller workflows, which we call agents, are each responsible for idempotently converging a single endpoint to its declared state.

These agents can be developed and tested independently, which simplifies both the architecture and the engineering effort, while dramatically increasing trust in the system.
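A minimal sketch of that decomposition, under stated assumptions: a controller holds the intended VLAN set for each endpoint and hands each agent only its own slice, while each agent knows how to converge one device. The vendor classes and their command syntax are made up for illustration.

```python
from typing import Dict, List, Set


class Agent:
    """Base agent: converges one endpoint's VLANs to its declared state."""

    def __init__(self, current: Set[int]) -> None:
        self.current = set(current)

    def render(self, action: str, vlan: int) -> str:
        raise NotImplementedError

    def converge(self, desired: Set[int]) -> List[str]:
        cmds = [self.render("remove", v) for v in sorted(self.current - desired)]
        cmds += [self.render("add", v) for v in sorted(desired - self.current)]
        self.current = set(desired)  # stands in for pushing cmds to the device
        return cmds


class VendorAAgent(Agent):
    # Made-up command syntax for a hypothetical vendor A.
    def render(self, action: str, vlan: int) -> str:
        return f"vlan {action} {vlan}"


class VendorBAgent(Agent):
    # Made-up command syntax for a hypothetical vendor B.
    def render(self, action: str, vlan: int) -> str:
        verb = "set" if action == "add" else "delete"
        return f"{verb} vlans v{vlan}"


def controller(intent: Dict[str, Set[int]], agents: Dict[str, Agent]) -> Dict[str, List[str]]:
    # The controller holds intent only; each agent knows its own device.
    return {name: agents[name].converge(want) for name, want in intent.items()}


agents = {"sw1": VendorAAgent({20}), "sw2": VendorBAgent(set())}
result = controller({"sw1": {10}, "sw2": {10}}, agents)
print(result)
# {'sw1': ['vlan remove 20', 'vlan add 10'], 'sw2': ['set vlans v10']}
```

Because each agent has a single narrow job, it can be unit-tested on its own, for example checking that `VendorBAgent({10}).converge(set())` returns `['delete vlans v10']` with no controller or other device involved. In this design, supporting another vendor means adding one agent class rather than editing every workflow.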

Declarative automation and trust by design

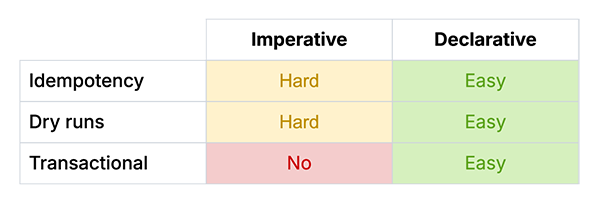

Declarative automation naturally supports the three technical foundations of trustworthy automation:

- Idempotency ensures repeatable outcomes.

- Dry runs show you exactly what changes will be made before anything is executed.

- Transactional execution ensures that changes either complete entirely or leave the system unchanged if a failure occurs.
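The sketch below shows how dry runs and transactional execution fall out of the declarative model: the plan is computed before anything runs, so a dry run simply returns it, and a snapshot taken before applying lets a failed run restore the original state. It assumes a hypothetical flat key/value config and simulates failure with a `fail_key` parameter. On real network devices, transactional behavior typically comes from the platform itself, such as a NETCONF candidate datastore with a `commit` operation.

```python
from typing import Dict, List, Tuple

Config = Dict[str, str]   # hypothetical flat key/value device config
Change = Tuple[str, str]  # (key, new value)


def plan_changes(desired: Config, current: Config) -> List[Change]:
    # Keys whose value differs or is missing. Deletions are omitted for brevity.
    return [(k, v) for k, v in sorted(desired.items()) if current.get(k) != v]


def apply_changes(desired: Config, device: Config, dry_run: bool = False,
                  fail_key: str = "") -> List[Change]:
    changes = plan_changes(desired, device)
    if dry_run:
        return changes  # dry run: report the plan, touch nothing
    snapshot = dict(device)  # taken before any change, for rollback
    try:
        for key, value in changes:
            if key == fail_key:  # simulated failure, for illustration only
                raise RuntimeError(f"failed to set {key}")
            device[key] = value
    except RuntimeError:
        device.clear()
        device.update(snapshot)  # all-or-nothing: restore the original config
        raise
    return changes


device = {"hostname": "sw1", "ntp": "10.0.0.1"}
desired = {"hostname": "sw1", "ntp": "10.0.0.2", "vlan10": "users"}

preview = apply_changes(desired, device, dry_run=True)
print(preview)  # [('ntp', '10.0.0.2'), ('vlan10', 'users')]

try:
    apply_changes(desired, device, fail_key="vlan10")
except RuntimeError:
    pass
after_failure = dict(device)
print(after_failure)  # {'hostname': 'sw1', 'ntp': '10.0.0.1'}

apply_changes(desired, device)
print(device == desired)  # True
```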

All three of these technical foundations are easy to support with declarative automation, but difficult or impossible to achieve with imperative approaches.

How to shift from imperative to declarative automation

Moving from imperative to declarative automation can be a big mindset shift. Instead of asking “What steps do I need to automate?”, you start asking, “What state do I want the system to be in?”

That also means thinking differently about tooling and architecture.

State representation becomes central. You need a clear source of truth for intended state. Agents and controllers become part of the system, responsible for driving convergence. Testing becomes more about validating that your definitions produce the right state.
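With intended state held in a source of truth, much of the testing can happen before anything touches a device. The sketch below validates a hypothetical intent structure, checking that VLAN IDs fall in the usable 802.1Q range (1 to 4094) and that every interface references a defined VLAN. The data layout is an assumption, not a standard schema.

```python
from typing import Dict, List

# Hypothetical source-of-truth layout: VLAN definitions plus per-interface
# assignments. In practice this might live in Git, a database, or a DCIM/IPAM tool.
intent = {
    "vlans": {10: "users", 20: "voice"},
    "interfaces": {"eth1": [10], "eth2": [10, 20], "eth3": [30]},
}


def validate(intent: Dict) -> List[str]:
    """Return a list of problems; an empty list means the intent is usable."""
    errors: List[str] = []
    for vid in intent["vlans"]:
        if not 1 <= vid <= 4094:  # 802.1Q VLAN IDs 0 and 4095 are reserved
            errors.append(f"VLAN {vid} is out of range")
    for iface, vids in intent["interfaces"].items():
        for vid in vids:
            if vid not in intent["vlans"]:
                errors.append(f"{iface} references undefined VLAN {vid}")
    return errors


print(validate(intent))  # ['eth3 references undefined VLAN 30']
```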

In most environments, a mix of approaches is healthy: declarative automation for common configurations at scale, imperative workflows for complex or exceptional cases like OS upgrades.

The goal isn’t to be 100% declarative, but to adopt a declarative approach wherever it reduces complexity and improves reliability.

Declarative vs imperative at scale

In modern, multi-vendor networks, automation must scale beyond what imperative approaches can handle.

As the cloud world learned years ago, declarative models provide the foundation for that scale: more predictable behavior, better error handling, simpler maintenance, and greater resilience.

Declarative thinking will increasingly shape the next generation of infrastructure automation tools and practices, not as an absolute replacement for imperative methods, but as a smarter default for most automation workflows.

For any team aiming to build infrastructure automation that scales, adapts to complexity, and earns operator trust, adopting declarative approaches is one of the smartest steps you can take.