AI agents are starting to use our infrastructure the way people do. That changes how we need to design our infrastructure automation and management systems.

Designing for human interactions is still important but now we also need to think about designing intelligently for agents.

Luckily there’s already an approach that works brilliantly with AI: schema-driven design.

Schema-driven design is the way to make systems usable at scale for both AI and humans. It gives AI clear rules, predictable data, and safe paths to act. And it gives humans validated and easily queried data too.

AI agents are the new users

AI is no longer a mere background helper or assistant to humans but an entirely new user group on its own.

I’ve been influenced in my thinking on this topic by Prefect CEO Jeremiah Lowin, who calls out the need to create “agent stories” when designing systems, much as we would have designed for “user stories” in the past:

This reframing forces us to design for a different set of needs. Agents don’t care about intuitive UIs or clever microcopy. They care about clear contracts, machine-parsable errors, composability, and minimizing the latency between their actions.

We’re already seeing that many systems previously designed for human users aren’t working particularly well for agents.

As an example, developer Ryan Stortz walks through his struggle connecting an LLM to the CLI. He describes how the terminal as it’s currently designed doesn’t give the LLM enough context, and how working with small context windows gets “gnarly” fast.

Ryan closes his post with a plea for better information architecture at the CLI level, and machine-readable interfaces that agents can navigate easily. This is the user experience future—an AI UX—that we all need to start thinking about.

Data curation drives AI performance

Our first experiments building AI at OpsMill quickly taught us a valuable lesson. We tried handing an LLM a full GraphQL schema from Infrahub. We blew the token budget before the model could even reason. Lots of inputs didn’t help. It simply overwhelmed the agent and slowed everything down.

Since then, we’ve seen that AI performance improves dramatically when you curate the input. If automation depends on good data, then AI depends on curated slices of it.

You want to define minimal, task-scoped views of your data, giving the agent only what it needs to do the next step. This keeps prompts small, reduces ambiguity, and improves accuracy and repeatability.

This “slicing” is how we ended up designing Infrahub’s MCP server, so agents can easily parse infrastructure data and stay on task without overload.

Abstraction drives AI performance

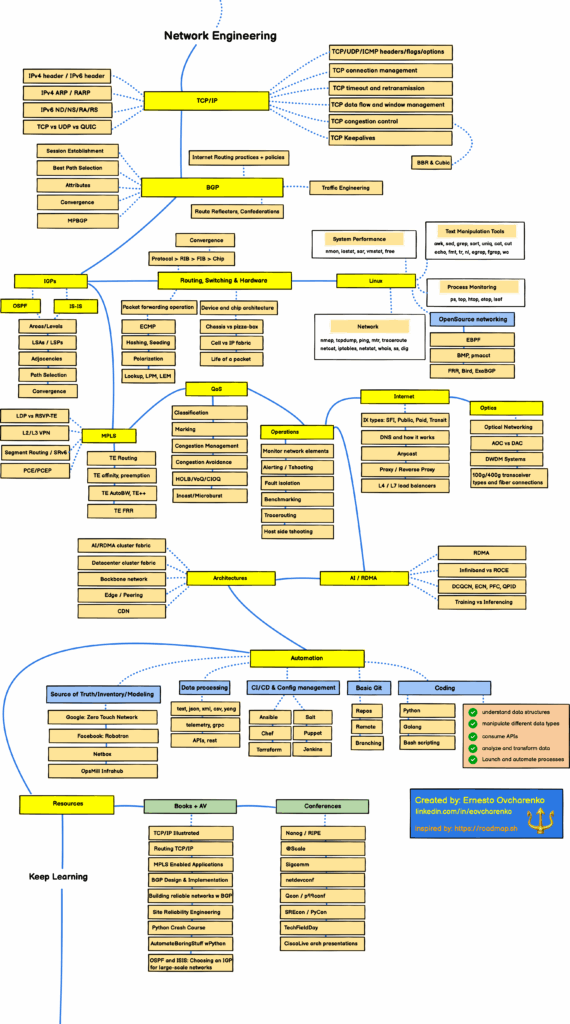

Modern network engineering is a complex domain with dozens of sub-domains and many thousands of details to learn. This roadmap from Ernesto Ovcharenko illustrates the complexity well.

Now, imagine letting an AI loose on that roadmap and expecting it to figure out where to go and what to do all on its own. You’re not going to get very helpful or accurate responses from it. And that’s even before all this general domain knowledge gets applied to a specific set of infrastructure data representing a specific environment!

Abstracting that complexity is absolutely critical to preventing the AI from getting overwhelmed.

In networking, we abstract complexity for ourselves by, for example, layering functions with the TCP/IP stack, segmenting the network with routing domains, or defining responsibilities with service models.

Agents need the same simplification. You'll have much greater success with AI if you break problems into small, schema-driven chunks, let the agent complete well-bounded tasks, then chain those tasks into larger outcomes.

To recap, curation controls what data the AI sees. Abstraction controls how the data and tasks are structured. Both are essential to building systems that AI can navigate effectively.

Schema-driven design is the foundation for AI

Schema-driven design is how we teach AI about our systems in this curated and abstracted way.

Loose JSON blobs, optional-everywhere fields, and half-documented objects cripple agents. Agents can’t infer intent or safe defaults from that kind of data.

Strong schemas do the opposite. When every field has a clear type and purpose, relationships are explicit, and descriptions are machine-parsable, agents can accurately complete discovery without a human in the loop.

GraphQL is a good example of schema-driven design in practice. It's both a query language and a schema language used to define APIs.

Created as an alternative to REST, GraphQL was designed to be more efficient by returning only the fields you ask for. That efficiency lines up directly with what AI needs: curated slices of data, not everything at once.

At its core, GraphQL is schema-driven and self-documenting, which means an agent always knows exactly what data is available and how to request it. Through introspection—the ability of an API to describe its own schema—agents can explore and plan queries without human guidance.

These qualities are a large part of why we chose GraphQL as the interface for querying data in Infrahub.

Building AI-ready frameworks

AI works best on top of frameworks—structured platforms that provide reusable building blocks and guardrails to curate data, abstract complexity, and expose safe defaults. The framework handles the heavy lifting so the AI can focus on intent and outcomes.

We’ll see more of these AI-enabling frameworks in the coming years, and they’ll become the foundation many of us build on.

This is also when we’ll see the emergence of what I call “middle code,” a term I explained on the What the Dev podcast. No-code platforms use very short scripts. High code means writing large systems from scratch.

Middle code is the space in between: scripts of a few hundred lines that AI can generate on top of a framework. I believe we’ll see much more of this middle code in the future.

Infrahub as an AI-ready framework for automation

We built Infrahub as a data management platform for powering infrastructure automation. The same traits that make it powerful for automation also make it a ready fit for enabling AI.

- Flexible schema. Clear, explicit schemas are the foundation. They eliminate ambiguity for AI agents, just as they do for humans. When every field and relation is well defined and documented, agents can navigate infrastructure data with confidence.

- GraphQL query engine. Because GraphQL was designed to return only what it’s asked for, it aligns perfectly with AI’s need for curated input. Its schema-first design and built‑in introspection let agents discover available data and relationships without human guidance.

- Knowledge graph. Infrahub is built on a knowledge graph model, which makes complex relationships explicit and queryable. This capability helps AI agents understand context and dependencies across infrastructure data.

- Git-native workflows. Branches provide the sandbox where agents can propose changes safely. Teams can review, test, and merge only what works. This keeps human oversight in the loop and ensures agent contributions don’t introduce risk.

Together, these features make Infrahub an AI‑ready source of truth: Agents can work with structured, documented, and curated data to improve accuracy and minimize hallucinations. And branching allows for any AI-recommended actions to be safely sandboxed while waiting for human review.

Rethinking system design for an AI-powered future

We’re not moving away from systems designed for humans anytime soon but we are adding a new class of user: AI agents.

Enabling those agents to do their best work means providing clear, structured data in just the right amounts, helping them navigate complexity, chunking tasks into discrete blocks, and giving them guardrails to keep them on safe paths.

Schema-driven design is the foundation that makes all of this possible. And it’s what you’ll find in Infrahub.

If you’d like to see how Infrahub applies these ideas, try our free Community edition from GitHub or request a demo from our sales team.